Choreographic Coding Lab at A+E Lab

During the Choreographic Coding Lab at A+E Lab, we spent a week learning from choreographers and technologists about how companies such as Wayne McGregor, Batsheva Dance Company and Motionbank approach working with dance and technology. Motionbank is an initiative started by choreographer William Forsythe at Hochschule Mainz that creates digital records of choreography using motion and spatial capture technologies.

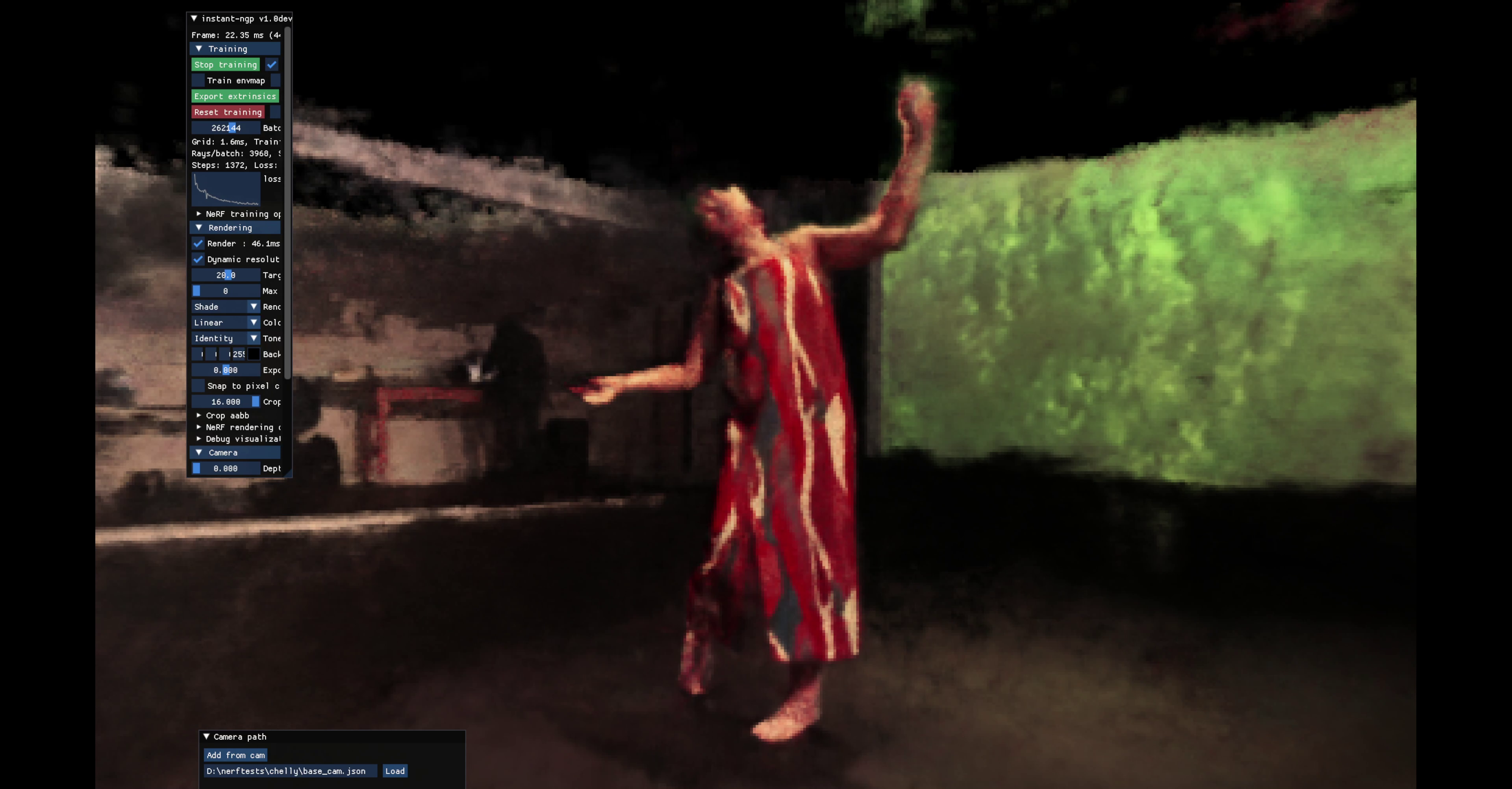

I chose to explore creating Neural Radiance Fields (NeRFs) of the dancers. A NeRF is a volumetric scene capture technique which estimates density and radiance values throughout a three-dimensional volume from a series of photos or a video. It can capture view-dependent effects such as reflections and transparency, which are challenging for photogrammetry to reproduce.
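To make the idea of rendering from radiance values more concrete, here is a minimal sketch of how samples along a single camera ray are typically composited into one pixel colour in NeRF-style volume rendering. It is an illustration only, not the code Luma.ai or any particular NeRF implementation uses. The function name `composite_ray` and its parameters are hypothetical, and the colours are assumed to have already been evaluated for the current viewing direction (which is where view-dependent effects come from).

```python
import math

def composite_ray(densities, colors, step):
    """Alpha-composite samples along one camera ray, front to back.

    densities: density value at each sample (higher = more opaque)
    colors:    (r, g, b) radiance at each sample for this view direction
    step:      distance between consecutive samples (assumed constant)
    """
    transmittance = 1.0          # fraction of light still reaching the camera
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        # Opacity contributed by this sample over one step
        alpha = 1.0 - math.exp(-sigma * step)
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        # Light is attenuated before reaching samples further back
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Empty space in front of a dense red sample: the pixel comes out red.
pixel, remaining = composite_ray([0.0, 50.0], [(0, 0, 1), (1, 0, 0)], step=1.0)
```

Because each sample only contributes in proportion to the light that gets past the samples in front of it, semi-transparent material such as fabric or hair blends with what is behind it rather than forming a hard surface.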

I used a plugin from Luma.ai to bring these captures into Unreal Engine, then exported them from Unreal as .exr sequences with Cryptomatte. EXR is a format which allows extra information and render passes (also known as AOVs, or Arbitrary Output Variables) to be passed to compositing software in the same file, and Cryptomatte uses it to store ID mattes for the objects in a scene. This allowed me to apply post-processing effects separately to different objects/dancers in the scene in After Effects.
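As a rough illustration of what a per-object matte makes possible, the sketch below blends an effect into an image only where one object's matte has coverage. It assumes the coverage matte for a single dancer has already been extracted from the EXR as values between 0 and 1. It does not read EXR files or decode Cryptomatte data, and it is not how After Effects works internally. The names `apply_effect_to_object` and `desaturate` are hypothetical.

```python
def desaturate(px):
    """Example effect: convert an (r, g, b) pixel to grey using Rec. 709 luma weights."""
    luma = 0.2126 * px[0] + 0.7152 * px[1] + 0.0722 * px[2]
    return (luma, luma, luma)

def apply_effect_to_object(image, coverage, effect):
    """Blend effect(pixel) into the image, weighted by per-pixel matte coverage.

    image:    rows of (r, g, b) tuples
    coverage: rows of floats in [0, 1], same shape as image
    effect:   function mapping one (r, g, b) pixel to another
    """
    out = []
    for row_px, row_cov in zip(image, coverage):
        out_row = []
        for px, cov in zip(row_px, row_cov):
            fx = effect(px)
            # cov = 1 gives the full effect, cov = 0 leaves the pixel untouched
            out_row.append(tuple((1 - cov) * p + cov * f for p, f in zip(px, fx)))
        out.append(out_row)
    return out

# Two red pixels: only the first is covered by the object's matte.
image = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
coverage = [[1.0, 0.0]]
result = apply_effect_to_object(image, coverage, desaturate)
```

Fractional coverage values along an object's edge give a soft blend there, which is why a matte with coverage information tends to composite more cleanly than a hard binary mask.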

Inspired by Nuke's CopyCat feature and the Nuke Cattery, I have more recently been experimenting with how machine learning can be used to estimate information about a scene from imagery, and how that information can be used to populate a 3D scene and selectively apply effects/behaviours.

I ran an image from the CCL workshop through ML processes to gain more information about the environment, specifically:

1. monocular depth estimation
2. instance segmentation
3. Cryptomatte object ID
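One way these outputs could be combined to populate a 3D scene is to back-project each pixel into space using its estimated depth, and to group the resulting points by the instance they belong to. The sketch below assumes a simple pinhole camera and a depth map in consistent units. Many monocular depth models only give relative depth, so in practice a scale would need to be chosen. The function name `backproject` and the camera parameters `fx`, `fy`, `cx`, `cy` are hypothetical and not taken from any specific tool.

```python
from collections import defaultdict

def backproject(depth, instance_ids, fx, fy, cx, cy):
    """Turn a depth map plus an instance-ID map into 3D points per instance.

    depth:        rows of depth values (0 or negative = no estimate)
    instance_ids: rows of integer IDs from instance segmentation, same shape
    fx, fy:       focal lengths in pixels (assumed known or guessed)
    cx, cy:       principal point in pixels (often the image centre)
    """
    points = defaultdict(list)
    for v, (depth_row, id_row) in enumerate(zip(depth, instance_ids)):
        for u, (z, inst) in enumerate(zip(depth_row, id_row)):
            if z <= 0:
                continue  # skip pixels with no valid depth
            # Pinhole model: pixel offset from the centre, scaled by depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points[inst].append((x, y, z))
    return dict(points)

# Tiny 2x2 example: instance 0 is one pixel, instance 1 covers the rest.
depth = [[2.0, 2.0], [0.0, 4.0]]
ids = [[0, 1], [1, 1]]
clouds = backproject(depth, ids, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Each instance then has its own small point cloud, so an effect or behaviour can be attached to one dancer or object without touching the rest of the scene.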